2017-35: Misperception, Mistrust & Misinformation

- by Mike Flood

- 07 Dec, 2017

The online public is frequently wrong, sometimes very wrong, about key global issues and features of the population in their country. This is the finding of Ipsos MORI’s latest Perils of Perception survey across 38 countries. Great Britain fares better than most, but we’re still some way behind the leading (best-informed) nations, Sweden, Norway and Denmark. The worst on Ipsos’ Misperception Index are South Africa, Brazil and the Philippines.

In the run-up to the Referendum last year, the MD of the Ipsos MORI Social Research Institute, Bobby Duffy, laid the blame for some of the misunderstanding at the door of politicians. In ‘Wondering why Boris Johnson keeps mentioning bendy bananas & Brexit’, Duffy raised questions about why people were getting their facts so confused. “Despite the best efforts of many organisations to present a fair reflection of sound evidence,” he opined, “the picture we’re getting is that the facts are messy and contested. Some things are known and relatively undisputed… But many of us are very wrong on the biggest issues, those that we say will settle our vote: immigration, the economy and sovereignty… First, we need to recognise that people overestimate what they worry about, as much as worry about what they overestimate [for example] we overestimate EU immigration but underestimate our reliance on investment from EU countries… Second, we know that we remember a vivid story much more than dry statistics. Bendy bananas and other extreme anecdotes stick — it’s not a media effect, it’s how we’re wired to think. So it’s not nearly as ludicrous as it may seem for Boris Johnson to come back to bananas — repeatedly.”

Mind Control

This issue of not hearing or believing what others say was explored a couple of days ago by Simon McCarthy-Jones in The Conversation. In ‘Social networking sites may be controlling your mind’, he asks: “How can you live the life you want to, avoiding the distractions and manipulations of others?” and concludes that to do so we need to know ourselves much better. “Sadly, we are often bad at this,” he observes. “By contrast, others know us increasingly well. Our intelligence, sexual orientation — and much more — can be computed from our Facebook likes. Machines, using data from our digital footprint, are better judges of our personality than our friends and family. Soon, artificial intelligence, using our social network data, will know even more. The 21st-century challenge will be how to live when others know us better than we know ourselves… There are industries dedicated to capturing and selling our attention — and the best bait is social networking. Facebook, Instagram and Twitter have drawn us closer round the campfire of our shared humanity. Yet, they come with costs, both personal and political. Users must decide if the benefits of these sites outweigh their costs.” Humans, he opines, “have a fundamental need to belong and a fundamental desire for social status. As a result, our brains treat information about ourselves like a reward. When our behaviour is rewarded with things such as food or money, our brain’s ‘valuation system’ activates. Much of this system is also activated when we encounter self-relevant information. Such information is hence given great weight.”

McCarthy-Jones makes a number of interesting suggestions as to how we might benefit but not get consumed: “Companies could redesign their sites to mitigate the risk of addiction. They could use opt-out default settings for features that encourage addiction and make it easier for people to self-regulate their usage. However, some claim that asking tech firms ‘to be less good at what they do feels like a ridiculous ask’. So government regulation may be needed, perhaps similar to that used with the tobacco industry. Users could also consider whether personal reasons are making them vulnerable to problematic use. Factors that predict excessive use include an increased tendency to experience negative emotions, being unable to cope well with everyday problems, a need for self-promotion, loneliness and fear of missing out.” Users could also empower themselves. “It is already possible to limit time on these sites using apps such as Freedom, Moment and StayFocusd.”

Tackling ‘Useful Enemies’

It is interesting to see that the BBC has just launched a new initiative to tackle some of these problems. It is outlined by its Media Editor, Amol Rajan, who sees fake news as “a useful enemy” for people like Trump, who use it to “motivate his base, obstruct scrutiny of his policies, and potentially undermine his opponents.” For “ambitious politicians keen to flaunt their digital knowledge and boost their profile, the fake news phenomenon is a handy option,” and for “digital firms keen to display a social conscience, fake news provides an opportunity to clarify their purpose — ‘we're technology companies, not media companies’… even if their platforms are zones in which foreign autocrats interfere in domestic matters. And for the mainstream media, which is suffering from a crisis of trust, seeing traditional business models turn into air… the comparison with fake news is a way of making the case for professional journalists who go after the truth.”

The BBC initiative is focusing on young people and involves online mentoring and school visits to “promote better judgement about misinformation online… Teenagers have their news chosen for them by friends on some social media platforms, or chosen by those lovely people at Snapchat, according to their social preferences. They snack on news throughout the day (and) occasionally dip into the permanent stream of information on their mobiles and feeds.” To resist the obvious dangers, Rajan says, “requires knowledge (of the actual state of the world), intellectual tools (scrutiny to determine truth from falsehood), and courage (to call out liars). This trinity, when combined, produces news literacy — and it is this, rather than fake news itself, that the BBC's new initiative is aiming to promote.”

The Question of Trust

And finally there’s the question of trust… If you couple the above findings with those of the Edelman Trust Barometer (published earlier this year), an alarming picture emerges, perhaps another indication of how, as the world grows darker, we have become more and more absorbed in our own (safe) echo chambers. The Barometer exposes just how little trust people today have in business, government, NGOs and the media; and with this collapse of trust, the majority of those polled “now lack full belief that the overall system is working for them. In this climate, people’s societal and economic concerns, including globalization, the pace of innovation and eroding social values, turn into fears, spurring the rise of populist actions now playing out in several Western-style democracies.” Its conclusion is that “To rebuild trust and restore faith in the system, institutions must step outside of their traditional roles and work toward a new, more integrated operating model that puts people — and the addressing of their fears — at the center of everything they do.” And that’s quite a challenge!

Last night (5 Feb) The Institute of Art and Ideas debated ‘The Future of the Post-Truth World’ at the ICA in London. Speakers included Steve Fuller, Rae Langton and Hilary Lawson, ably managed by Orwell prize-winning journalist Polly Toynbee. Much ground was covered but one comment caught my attention: a reference to a BBC interview with Sean Spicer, President Trump’s first Press Secretary. It concerned Spicer’s now infamous description of the number of people who witnessed Trump’s inauguration.

The media made great play of the fact that the numbers attending were significantly fewer than at Obama’s inauguration. But one of the speakers pointed out that what had been meant by Spicer was the global media coverage not those present. Whether this is true or a convenient explanation of events is not clear. But it does raise the question of how you set the frame, and this was a major focus in the debate between the speakers. It's easy for both sides to believe they are right, and this is a recipe for misunderstanding and polarisation.

There's a Lot Going On!

There has been an enormous increase in the coverage of fake news and disinformation in the media in recent months. I’ve already identified and logged 24 items in the first 5 weeks of 2018, and this follows 217 items in 2017; 36 in 2016; 11 in 2015; 5 in 2014; and 3 in 2013. I haven't carried out a systematic study of publications on fake news / misinformation worldwide; these are simply items that I personally have found interesting. There has also been a rapid increase in the number of initiatives taken by state and other actors to address the problem: I log these in the Critical Information database and have so far identified over 230 initiatives, no fewer than 70 in the UK.

These figures give an indication of the size and complexity of the problems that we are facing.

It is to be welcomed that the topic of misinformation is getting more coverage, and that more people are becoming concerned about the consequences of lies and deception. What we need now are effective methods of neutralising the problem, and these are still a long way off...

Recent Developments

I’ve not been able to comment on developments for a couple of months because of other commitments (now virtually complete), but I have been following events. Here are some of the main issues that have been in the media; I’ve identified just one representative article per topic:

Internet

· the repeal in the US of legislation on net neutrality;

· the issue of controlling unaccountable algorithms;

Social Media

· Obama warning against 'irresponsible' social media use;

· a prominent former Facebook exec saying social media is ‘ripping apart society’;

· Facebook conceding that using it (a lot) might make you feel bad; also cutting back on news (to give us more time with advertisers);

· calls for tech giants to face taxes over extremist content;

· LinkedIn revealed to have been ‘hosting jihadist lectures’;

Fake News & Conspiracy Theories

· the UK Government announcing the setting up of an anti-fake news unit;

· the appearance of Deepfake, an app that enables hackers to face-swap Hollywood stars into pornography films;

· climate researchers bemoaning the fact that their work was turned into fake news;

· people believing conspiracy theories about mass shootings in the US being staged;

· how commercial brands secretly buy their way into legitimate publications with material that pretends to be objective;

Hate Speech & Counter-Extremism

· campaigner Sara Khan to lead new UK counter-extremism body;

· new report on hate speech / anti-Muslim messaging on social media [Hope Not Hate];

· England (and the US) opting out of the OECD’s Programme for International Student Assessment on the issue of tolerance — PISA is a triennial international survey of some 72 countries which aims to evaluate education systems worldwide by testing the skills and knowledge of 15-year-old students;

Information Warfare

· growing concern in the UK over information warfare — British Army Chief calling for investment to keep up with Russia.

We hope to cover these issues in the next few months.

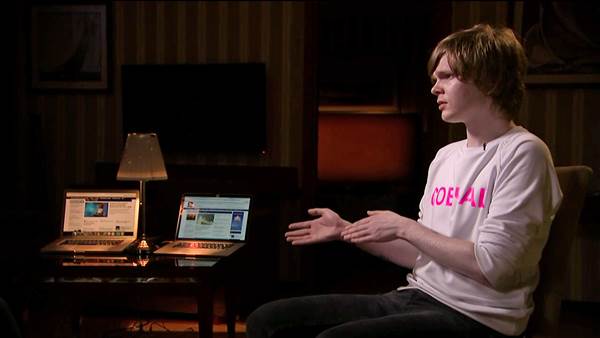

Vitaly Bespalov was once one of hundreds of Russians employed to pump out misinformation online at the Internet Research Agency, Putin’s troll factory in St Petersburg. His story was told this week on the BBC's Profile * and also on NBC News.

The IRA, it appears, is housed in a four-story concrete building on Savushkina Street in St Petersburg, which is “secured by camouflaged guards and turnstiles.” There, bloggers and former journalists work “around the clock to create thousands of incendiary social media posts and news articles to meet specific quotas.” Those on the third level blog to undermine Ukraine and promote Russia; those on the first create news articles that refer to these blogs. Workers on the third and fourth floors post comments on the stories and other sites under fake identities, pretending they are from Ukraine. And the marketing team on the second floor weaves all of this misinformation into social media.

Even though each floor works on material the others created, according to Vitaly "they don't have any contact with each other inside the building except for in the cafeteria or on smoke breaks…"

The Oxford Internet Institute has been studying "the purposeful distribution of misleading information over social media networks", and in July released a working paper on the topic which argues that computational propaganda (as it is known) is today "one of the most powerful new tools against democracy." The report makes chilling reading. It is based on research across 9 countries (Brazil, Canada, China, Germany, Poland, Taiwan, Russia, Ukraine & the US) carried out “during scores of elections, political crises, and national security incidents.”

Among the study's main findings are that “in authoritarian countries, social media platforms are a primary means of social control”, and in democracies, they are “actively used for computational propaganda either through broad efforts at opinion manipulation or targeted experiments on particular segments of the public." In every country, it says, "we found civil society groups trying, but struggling, to protect themselves and respond to active misinformation campaigns.”

“The most powerful forms of computational propaganda involve both algorithmic distribution and human curation—bots and trolls working together… learning from and mimicking real people so as to manipulate public opinion across a diverse range of platforms and device networks... One person, or a small group of people, can use an army of political bots on Twitter to give the illusion of large-scale consensus... Regimes use political bots, built to look and act like real citizens, in efforts to silence opponents and to push official state messaging. Political campaigns, and their supporters, deploy political bots—and computational propaganda more broadly—during elections in attempts to sway the vote or defame critics. Anonymous political actors harness key elements of computational propaganda such as false news reports, coordinated disinformation campaigns, and troll mobs to attack human rights defenders, civil society groups, and journalists.”

The paper concludes that social media firms “may not be creating this nasty content, but they are the platform for it. They need to significantly redesign themselves if democracy is going to survive.” In this respect it is good to see Google announcing (21 Nov) that it is going to ‘derank’ stories from Kremlin-owned publications RT (Russia Today) and Sputnik in response to allegations about Russia meddling in western democracies. Not surprisingly, the Kremlin says it is incensed by the move, and denies any knowledge of the activities of the St Petersburg troll factory. It has suggested that reports that it exists might be fake.

Apparently Vitaly Bespalov no longer believes anything he reads on social media. It would be helpful if more people could adopt this attitude, and if the social media platforms did more to remove the poison from people's phones and desktops.

One can't help wondering where Vitaly is today. We assume he’s in hiding; that is, if he’s a real person and not the product of a fertile western imagination/dirty trick.

* The BBC actually profiled the man accused of funding the St Petersburg troll factory, Yevgeny Prigozhin, who, as it says, "has moved from jail to restaurateur and close friend of President Putin, but precious little is known about his personal life."