2017-29: Online Information & Fake News

- by Mike Flood

- 24 Oct, 2017

In 2017 the proportion of adults in the UK consuming news online exceeded the proportion watching TV news (74% versus 69%). This was one of many interesting facts contained in a POSTnote [No 559], ‘Online Information & Fake News’, published in July by the Parliamentary Office of Science & Technology.

The POSTnote considers inter alia how people access news online, how algorithms and social networks influence the content that users see, and options for mitigating any negative impact. In particular, it explores:

- how people in the UK access and share news online

- the effects of filtering

- the factors driving fake news and its effects

- approaches to addressing the challenges

One interesting idea briefly mentioned in the POSTnote is that put forward by Sander Van der Linden, Director of the Cambridge Social Decision-Making Lab, who argues that it may be possible to ‘inoculate’ against misinformation by pre-emptively warning people about politically motivated attempts to spread misinformation.

Van der Linden explained the idea in a Sunday Lecture he gave in Conway Hall in May, entitled ‘Beating the Hell Out of Fake News’. He says he started thinking about the vaccine metaphor when he “came across some interesting work that showed how models from epidemiology could be adapted to model the viral spread of misinformation, i.e. how one false idea can rapidly spread from one mind to another within a network of interconnected individuals. This led to the idea that it may be possible to develop a ‘mental’ vaccine.”

His team tested the idea in a study that focused on disinformation about climate change. There was “a debunked petition that formed the basis of a viral fake news story that claimed that thousands of scientists had concluded that climate change is a hoax.” The challenge was to see if it would be possible to “inoculate the public against this bogus petition”, and to some extent, he succeeded. “Vaccines do not always offer full protection,” he notes, “but the gist of inoculation is that we need to play offence rather than defence and that it’s better to prevent than cure. It is one tool, among many, to help each other navigate this brave new world.”

Last night (5 Feb) The Institute of Art and Ideas debated ‘The Future of the Post-Truth World’ at the ICA in London. Speakers included Steve Fuller, Rae Langton and Hilary Lawson, ably managed by Orwell prize-winning journalist Polly Toynbee. Much ground was covered but one comment caught my attention: reference to a BBC interview with Sean Spicer, President Trump’s first Press Secretary. It concerned Spicer’s now infamous description of the number of people who witnessed Trump’s inauguration.

The media made great play of the fact that the numbers attending were significantly fewer than at Obama’s inauguration. But one of the speakers pointed out that what Spicer had meant was the global media coverage, not those present. Whether this is true or a convenient explanation of events is not clear. But it does raise the question of how you set the frame, and this was a major focus in the debate between the speakers. It's easy for both sides to believe they are right, and this is a recipe for misunderstanding and polarisation.

There's a Lot Going On!

There has been an enormous increase in the coverage of fake news and disinformation in the media in recent months. I’ve already identified and logged 24 items in the first 5 weeks of 2018, and this follows 217 items in 2017; 36 in 2016; 11 in 2015; 5 in 2014; and 3 in 2013. I haven't carried out a systematic study of publications on fake news / misinformation worldwide; these are simply items that I personally have found interesting. There has also been a rapid increase in the number of initiatives taken by state and other actors to address the problem: I log these in the Critical Information database and have so far identified over 230 initiatives, no fewer than 70 in the UK.

These figures give an indication of the size and complexity of the problems that we are facing.

It is to be welcomed that the topic of misinformation is getting more coverage, and that more people are becoming concerned about the consequences of lies and deception. What we need now are effective methods of neutralising the problem, and these are still a long way off...

Recent Developments

I’ve not been able to comment on developments for a couple of months because of other commitments (now virtually complete), but I have been following events. Here are some of the main issues that have been in the media (I’ve identified just one representative article per topic):

Internet

· the repeal in the US of legislation on net neutrality;

· the issue of controlling unaccountable algorithms;

Social Media

· Obama warning against 'irresponsible' social media use;

· a prominent former Facebook exec saying social media is ‘ripping apart society’;

· Facebook conceding that using it (a lot) might make you feel bad; also cutting back on news (to give us more time with advertisers);

· calls for tech giants to face taxes over extremist content;

· LinkedIn revealed to have been ‘hosting jihadist lectures’;

Fake News & Conspiracy Theories

· the UK Government announcing the setting up of an anti-fake news unit;

· the appearance of Deepfake, an app that enables hackers to face-swap Hollywood stars into pornography films;

· climate researchers bemoaning the fact that their work was turned into fake news;

· people believing conspiracy theories about mass shootings in the US being staged;

· how commercial brands secretly buy their way into legitimate publications with material that pretends to be objective;

Hate Speech & Counter-Extremism

· campaigner Sara Khan to lead new UK counter-extremism body;

· new report on hate speech / anti-Muslim messaging on social media [Hope Not Hate];

· England (and the US) opting out of the OECD’s Programme for International Student Assessment on the issue of tolerance — PISA is a triennial international survey of some 72 countries which aims to evaluate education systems worldwide by testing the skills and knowledge of 15-year-old students;

Information Warfare

· growing concern in the UK over information warfare — British Army Chief calling for investment to keep up with Russia.

We hope to cover these issues in the next few months.

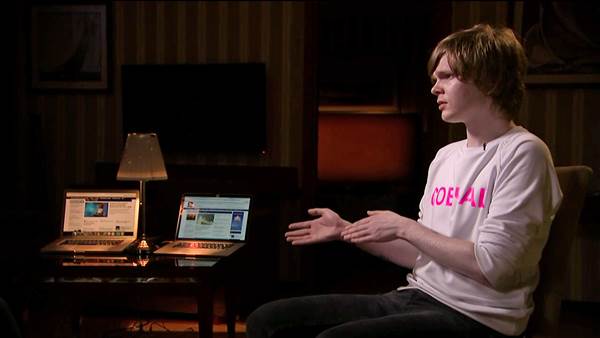

Vitaly Bespalov was once one of hundreds of Russians employed to pump out misinformation online at the Internet Research Agency, Putin’s troll factory in St Petersburg. His story was told this week on the BBC's Profile * and also on NBC News.

The IRA, it appears, is housed in a four-story concrete building on Savushkina Street in St Petersburg, which is “secured by camouflaged guards and turnstiles.” There, bloggers and former journalists work “around the clock to create thousands of incendiary social media posts and news articles to meet specific quotas.” Those on the third level blog to undermine Ukraine and promote Russia; those on the first create news articles that refer to these blogs. Workers on the third and fourth floors post comments on the stories and other sites under fake identities, pretending to be from Ukraine. And the marketing team on the second floor weaves all of this misinformation into social media.

Even though each floor works on material the others created, according to Vitaly "they don't have any contact with each other inside the building except for in the cafeteria or on smoke breaks…"

The Oxford Internet Institute has been studying "the purposeful distribution of misleading information over social media networks", and in July released a working paper on the topic which argues that computational propaganda (as it is known) is today "one of the most powerful new tools against democracy." The report makes chilling reading. It is based on research across 9 countries (Brazil, Canada, China, Germany, Poland, Taiwan, Russia, Ukraine & the US) carried out “during scores of elections, political crises, and national security incidents.”

Among the study's main findings are that “in authoritarian countries, social media platforms are a primary means of social control”, and in democracies, they are “actively used for computational propaganda either through broad efforts at opinion manipulation or targeted experiments on particular segments of the public." In every country, it says, "we found civil society groups trying, but struggling, to protect themselves and respond to active misinformation campaigns.”

“The most powerful forms of computational propaganda involve both algorithmic distribution and human curation—bots and trolls working together… learning from and mimicking real people so as to manipulate public opinion across a diverse range of platforms and device networks... One person, or a small group of people, can use an army of political bots on Twitter to give the illusion of large-scale consensus... Regimes use political bots, built to look and act like real citizens, in efforts to silence opponents and to push official state messaging. Political campaigns, and their supporters, deploy political bots—and computational propaganda more broadly—during elections in attempts to sway the vote or defame critics. Anonymous political actors harness key elements of computational propaganda such as false news reports, coordinated disinformation campaigns, and troll mobs to attack human rights defenders, civil society groups, and journalists.”

The paper concludes that social media firms “may not be creating this nasty content, but they are the platform for it. They need to significantly redesign themselves if democracy is going to survive.” In this respect it is good to see Google announcing (21 Nov) that it is going to ‘derank’ stories from Kremlin-owned publications RT (Russia Today) and Sputnik in response to allegations about Russia meddling in western democracies. Not surprisingly, the Kremlin says it is incensed by the move, and denies any knowledge of the activities of the St Petersburg troll factory. It has suggested that reports that it exists might be fake.

Apparently Vitaly Bespalov no longer believes anything he reads on social media. It would be helpful if more people could adopt this attitude, and if the social media platforms did more to remove the poison from people's phones and desktops.

One can't help wondering where Vitaly is today. We assume he’s in hiding; that is, if he’s a real person and not the product of a fertile western imagination or a dirty trick.

* The BBC actually profiled the man accused of funding the St Petersburg troll factory, Yevgeny Prigozhin, who, as it says, "has moved from jail to restaurateur and close friend of President Putin, but precious little is known about his personal life."